Conformers, Convolution-augmented Transformer for Automatic Speech Recognition

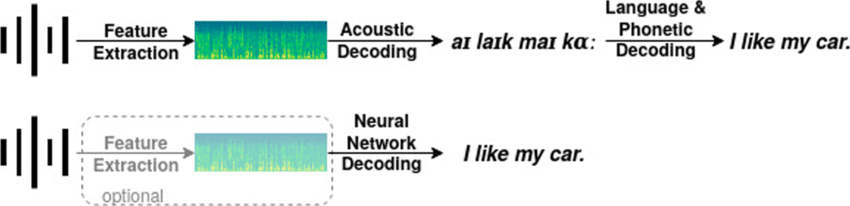

Automatic Speech Recognition (ASR) has revolutionized human-computer interaction, enabling seamless communication through voice. Traditional ASR pipelines typically have three main components: feature extraction, acoustic modelling, and language modelling. Feature extraction converts raw audio signals into compact representations such as Mel-frequency cepstral coefficients (MFCCs). The acoustic model, often based on Hidden Markov Models (HMMs) or deep learning methods, maps these features to phonemes. Finally, the language model predicts word sequences by leveraging probabilistic or neural approaches, ensuring coherent transcription. The advent of deep learning introduced RNNs, and later, Transformers revolutionized the field with their ability to capture long-range dependencies.

Despite its advancements, ASR systems still face challenges in effectively capturing local and global speech dependencies. Convolution-augmented Transformers, or Conformers, have emerged as a cutting-edge solution, combining the strengths of convolutional networks and Transformers to enhance ASR performance. This blog explores Conformers’ evolution, architecture, and impact in transforming speech recognition technologies.

Challenges in ASR

- Noise Robustness: Background sounds, echoes, and auditory disturbances can disrupt transcription quality.

- Example: In a noisy café, traditional ASR systems might transcribe speech inaccurately due to overlapping sounds.

- Speaker Variability: Variations in accents, dialects, and speech rates lead to inconsistent performance.

- Example: A system trained on American English may struggle with Scottish or Indian accents.

- Local Features: Traditional models like RNNs effectively capture short-term dependencies but lack scalability.

- Example: Understanding phoneme transitions in a single word.

- Global Features: Transformers excel at modeling long-range relationships but may miss fine-grained details.

- Example: Identifying sentence context across multiple words.

What are Conformers?

Conformers integrate convolutional modules with Transformers, leveraging both strengths. Convolutions capture local features, while self-attention (Transformer) handles global dependencies. Traditional Transformers rely solely on self-attention, which can be computationally expensive. Conformers enhance efficiency by incorporating convolutional operations, making them better suited for ASR tasks.

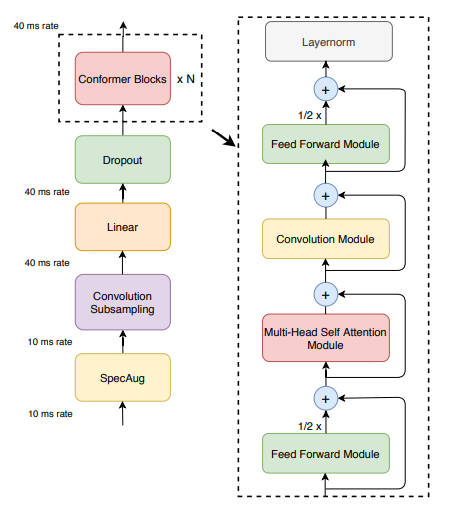

Architecture of Conformers

Conformer Encoder Architecture

class ConformerBlock(nn.Module):

def __init__(

self,

d_model,

num_heads,

ff_expansion_factor=4,

conv_kernel_size=31,

dropout=0.1,

):

super().__init__()

self.ffm1 = FeedForwardModule(d_model, ff_expansion_factor, dropout)

self.mha = MultiHeadSelfAttentionModule(d_model, num_heads, dropout)

self.conv = ConvolutionModule(d_model, conv_kernel_size, dropout)

self.ffm2 = FeedForwardModule(d_model, ff_expansion_factor, dropout)

self.layer_norm = nn.LayerNorm(d_model)

def forward(self, x):

x = x + 0.5 * self.ffm1(x)

x = x + self.mha(x)

x = x + self.conv(x)

x = x + 0.5 * self.ffm2(x)

return self.layer_norm(x)

class ConformerEncoder(nn.Module):

def __init__(

self,

input_dim,

num_layers,

d_model,

num_heads,

ff_expansion_factor=4,

conv_kernel_size=31,

dropout=0.1,

):

super().__init__()

self.input_proj = nn.Linear(input_dim, d_model)

self.layers = nn.ModuleList(

[

ConformerBlock(

d_model, num_heads, ff_expansion_factor, conv_kernel_size, dropout

)

for _ in range(num_layers)

]

)

self.layer_norm = nn.LayerNorm(d_model)

def forward(self, x):

# Input x shape: (batch_size, seq_len, input_dim)

x = self.input_proj(x)

for layer in self.layers:

x = layer(x)

return self.layer_norm(x)

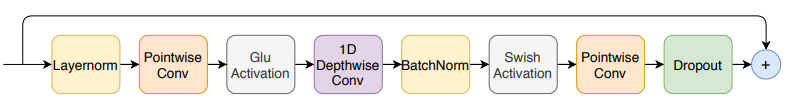

Convolution Module

class ConvolutionModule(nn.Module):

def __init__(self, d_model, kernel_size=31, dropout=0.1):

super().__init__()

self.layer_norm = nn.LayerNorm(d_model)

self.pointwise_conv1 = nn.Conv1d(d_model, 2 * d_model, kernel_size=1)

self.glu = nn.GLU(dim=1)

self.depthwise_conv = nn.Conv1d(

d_model,

d_model,

kernel_size=kernel_size,

groups=d_model,

padding=kernel_size // 2,

)

self.batch_norm = nn.BatchNorm1d(d_model)

self.pointwise_conv2 = nn.Conv1d(d_model, d_model, kernel_size=1)

self.dropout = nn.Dropout(dropout)

def forward(self, x):

# Input x shape: (batch_size, seq_len, d_model)

residual = x

x = self.layer_norm(x)

x = x.transpose(1, 2) # Shape: (batch_size, d_model, seq_len)

x = self.pointwise_conv1(x)

x = self.glu(x)

x = self.depthwise_conv(x)

x = self.batch_norm(x)

x = self.pointwise_conv2(x)

x = self.dropout(x)

x = x.transpose(1, 2) # Shape: (batch_size, seq_len, d_model)

return residual + x

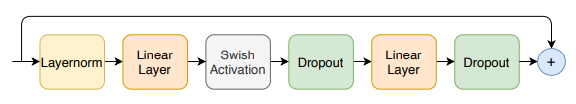

Feed Forward Module

class Swish(nn.Module):

def forward(self, x):

return x * torch.sigmoid(x)

class FeedForwardModule(nn.Module):

def __init__(self, d_model, ff_expansion_factor=4, dropout=0.1):

super().__init__()

self.fc1 = nn.Linear(d_model, d_model * ff_expansion_factor)

self.fc2 = nn.Linear(d_model * ff_expansion_factor, d_model)

self.dropout = nn.Dropout(dropout)

self.activation = Swish()

def forward(self, x):

out = self.fc1(x)

out = self.activation(out)

out = self.dropout(out)

out = self.fc2(out)

return self.dropout(out)

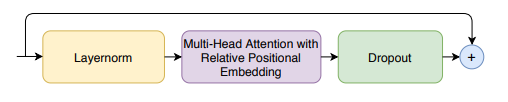

Multi-Headed Self-Attention Module

class MultiHeadSelfAttentionModule(nn.Module):

def __init__(self, d_model, num_heads, dropout=0.1):

super().__init__()

self.mha = nn.MultiheadAttention(d_model, num_heads, dropout=dropout)

self.dropout = nn.Dropout(dropout)

self.layer_norm = nn.LayerNorm(d_model)

def forward(self, x):

residual = x

out, _ = self.mha(x, x, x)

out = self.dropout(out)

return self.layer_norm(residual + out)

How Conformers Improve ASR

Handling Local and Global Dependencies

Conformers excel in balancing the need to capture both local and global dependencies, which are critical for effective ASR. The convolutional modules focus on extracting local features like phonemes and syllable structures, while the self-attention mechanism ensures that the model captures the broader context, such as sentence structure and semantics.

Example: Imagine transcribing a sentence with complex word dependencies, like “The cat, despite being afraid, climbed the tall tree.” Conformers can handle both the word “afraid” influencing “cat” and the sequence “climbed the tall tree” representing the action.

Improved Efficiency and Accuracy

By incorporating convolutional layers, Conformers achieve a reduction in computational overhead while maintaining high accuracy. Convolutional operations are computationally less expensive than self-attention for local dependencies, allowing Conformers to process audio sequences more efficiently.

Example: Training on large datasets like Librispeech shows that Conformers can achieve a Word Error Rate (WER) of below 5% while utilizing fewer resources compared to standard Transformers.

Real-world Applications

Conformers have been adopted in a variety of applications, ranging from personal assistant devices like Amazon Alexa and Google Assistant to automated customer service systems. Their ability to handle noise and variability makes them ideal for real-time transcription.

Example: In healthcare, Conformers are used to transcribe doctor-patient conversations into medical notes, even in noisy environments like emergency rooms.

Conclusion

Conformers represent a pivotal leap forward in Automatic Speech Recognition (ASR) technology by seamlessly integrating convolutional and Transformer architectures. This unique hybrid design addresses key limitations of traditional ASR models, such as the inability to efficiently capture local and global dependencies, while also improving noise robustness and adaptability to diverse speaker profiles. Conformers deliver unprecedented accuracy and efficiency by combining the strengths of convolutional modules for local context and self-attention mechanisms for global understanding.

The adoption of Conformers in real-world applications, from healthcare transcription to virtual assistants, underscores their transformative potential. They have not only set a new performance benchmark with lower Word Error Rates (WER) but also paved the way for future advancements in multilingual and low-resource language processing. Conformers exemplify how cutting-edge architecture can redefine the capabilities of ASR systems, making human-machine interaction more seamless and accessible.

References

- Vaswani, A., et al. “Attention Is All You Need.” 2017.

- Gulati, A., et al. “Conformer: Convolution-augmented Transformer for Speech Recognition.” 2020.

- Relevant open-source repositories and datasets.